
Does this repo's implementation of maskrcnn work with negative samples? #80


|

We allow input images to have no objects, if that's what you mean. However, the default data loader filters out such images at detectron2/detectron2/data/build.py Lines 319 to 326 in cea5934

If you need to keep them, you need to write a custom data loader |
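For readers unfamiliar with this kind of filtering, here is a minimal sketch of the idea. It is not the code at the lines referenced above. It assumes dataset records are plain dicts with an "annotations" list, and it assumes an annotation is usable unless it is flagged with `iscrowd`. The helper name `drop_images_without_annotations` is hypothetical.

```python
from typing import Any, Dict, List


def drop_images_without_annotations(
    dataset_dicts: List[Dict[str, Any]],
) -> List[Dict[str, Any]]:
    """Hypothetical helper: keep only records with at least one usable annotation."""

    def has_usable_annotation(annotations: List[Dict[str, Any]]) -> bool:
        # Assumption: an annotation counts as usable unless it is flagged as crowd.
        return any(ann.get("iscrowd", 0) == 0 for ann in annotations)

    return [
        record
        for record in dataset_dicts
        if has_usable_annotation(record.get("annotations", []))
    ]


records = [
    {"file_name": "a.png", "annotations": [{"bbox": [0, 0, 10, 10], "iscrowd": 0}]},
    {"file_name": "b.png", "annotations": []},  # negative sample, gets dropped
]
kept = drop_images_without_annotations(records)
print([r["file_name"] for r in kept])  # ['a.png']
```

A loader that skips this step passes negative samples such as `b.png` through to training.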

|

So if I wrote a data loader that accomplishes the same thing as setting |

|

I guess I'm wondering what the rationale is for filtering out empty annotations by default. Is it because some segmentation models like MaskRCNN can't make use of negative samples? |

There shouldn't be. But we don't normally do this, so there might be things we are not aware of. If you find any issues, please report them to us.

Mainly because that's the tradition I think. |

|

IIRC, the filtering was first added in detectron (v1) when we implemented keypoint prediction in Mask R-CNN. In that case only images that contain people annotated with keypoints are useful for training and this leaves a large number of images without positive examples. We found that training was faster and keypoint AP was no worse if the images without positives were filtered out. Since it had little impact on the usual COCO training data, filtering of these images was left on as the default. Detectron2 has inherited this choice, but in retrospect the design decision is a bit overfit to considerations of the COCO dataset. @ppwwyyxx -- perhaps we should consider making this filtering configurable? |

|

Thanks both of you for the explanations. IMO then, the option should probably be configurable by default, since it's a bit of an unexpected and hidden behavior for folks looking to use Detectron2 on their own custom datasets. I'm happy to make a PR to make empty filtering a |

|

@rbavery -- sounds good, a PR is welcome! |

|

added in 62522b6. |

Hi, I'm using a custom dataset with torch DataLoader.

File "/home/edwardchaos/detectron2_repo/detectron2/modeling/roi_heads/fast_rcnn.py", line 173, in _log_accuracy

If I add a check to skip empty prediction in the _log_accuracy function of fast_rcnn.py:

num_instances = self.gt_classes.numel()

It then fails at: |

|

negative samples should not make |

|

I found this comment in fast_rcnn.py:

proposals (list[Instances]): A list of N Instances, where Instances i stores the

Maybe Mask R-CNN accepts negative samples. I'll just use samples with gt labels for my purposes. |

|

I'm working on a project based on Torchvision, where negative samples also cause problems. Since Torchvision currently doesn't support them, I'm wondering if it would be worth switching to Detectron2. As of today, what is the answer to this issue's title: "Does this repo's implementation of maskrcnn work with negative samples?" What about Faster R-CNN? I understand a data loader option has been added, but it's still unclear whether the models support negative samples. |

|

Images without annotations are supported in R-CNN. |

|

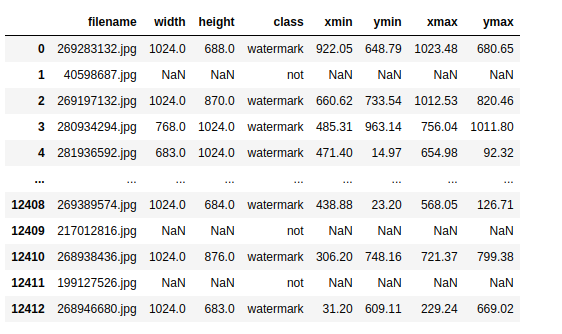

Hi, maybe someone can help me. I am trying to reproduce the process in https://github.com/TannerGilbert/Microcontroller-Detection-with-Detectron2/blob/master/Detectron2_Detect_Microcontrollers.ipynb with some modifications: I want to train the model without filtering out empty annotations, so I set DATALOADER.FILTER_EMPTY_ANNOTATIONS = False. My dataset looks like:

cfg = get_cfg()
os.makedirs(cfg.OUTPUT_DIR, exist_ok=True)

but my training process crashed with: |

|

It does not allow boxes with NaNs. |

I understand that, but how can I train the model with negative samples? How can I use images that have no boxes? If you can explain, I would be very grateful. |

|

You can train with an image whose "annotations" is an empty list. |
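As a rough illustration of that advice, the sketch below takes records in a dict layout with `file_name`, `height`, `width`, `image_id` and `annotations` keys and removes any annotation whose box contains NaN, so a box-less image ends up with an empty "annotations" list. The helper name `drop_nan_boxes`, the file names, and the sizes are made up for illustration. It is a sketch under those assumptions, not the library's own handling.

```python
import math
from typing import Any, Dict, List


def drop_nan_boxes(record: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical helper: remove annotations whose bbox has a NaN coordinate."""
    clean: List[Dict[str, Any]] = [
        ann
        for ann in record.get("annotations", [])
        if not any(math.isnan(float(v)) for v in ann["bbox"])
    ]
    # Return a shallow copy so the input record is left untouched.
    return {**record, "annotations": clean}


records = [
    {
        "file_name": "tile_with_object.png",  # made-up path
        "height": 512,
        "width": 512,
        "image_id": 0,
        "annotations": [{"bbox": [10.0, 20.0, 110.0, 220.0], "category_id": 0}],
    },
    {
        "file_name": "empty_tile.png",  # made-up path
        "height": 512,
        "width": 512,
        "image_id": 1,
        # A NaN placeholder box standing in for "no object"; boxes with NaNs
        # are not allowed, so this annotation is removed below.
        "annotations": [{"bbox": [float("nan")] * 4, "category_id": 0}],
    },
]

cleaned = [drop_nan_boxes(r) for r in records]
print([len(r["annotations"]) for r in cleaned])  # [1, 0]
```

The second record now has "annotations" equal to an empty list. For it to reach training, the loader must also not drop empty images, which is what the DATALOADER.FILTER_EMPTY_ANNOTATIONS = False setting mentioned earlier in the thread is for.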

|

Thanks, I'll try. |

|

I still get the same error. As I understand it, I shouldn't need to clip boxes in the boxes.clip(image_size) function, because there are no coordinates to clip, but the process fails the same way as when I had NaN in the bbox list. I now use an empty list for negative sample images. The question is still open, please help. |

Full traceback:

AssertionError Traceback (most recent call last)
~/detectron2_repo/detectron2/engine/defaults.py in train(self)
~/detectron2_repo/detectron2/engine/train_loop.py in train(self, start_iter, max_iter)
~/detectron2_repo/detectron2/engine/train_loop.py in run_step(self)
~/.local/lib/python3.6/site-packages/torch/utils/data/dataloader.py in __next__(self)
~/.local/lib/python3.6/site-packages/torch/utils/data/dataloader.py in _process_data(self, data)
~/.local/lib/python3.6/site-packages/torch/_utils.py in reraise(self)
AssertionError: Caught AssertionError in DataLoader worker process 1. |

❓ Questions and Help

I'm working with satellite imagery where some image tiles have over 100 objects to segment and some have none (these are negative samples). The negative samples still provide information on what is not a segmentation target (in my case, types of agricultural land cover). This issue on another facebookresearch repo seems to indicate that negative samples can be very useful but that they are not always supported out of the box: facebookresearch/maskrcnn-benchmark#169

Does this repo support negative samples? Does it depend on the maskrcnn model I pretrain from in the model zoo?
